
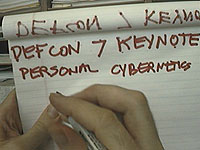

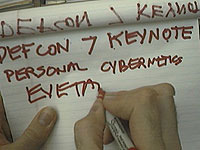

I am a document camera

My becoming a document camera arose quite naturally during some of the many invited lectures I was asked to give. I'd typically use a video projector, plug the projector into my body, and make the presentation from the computer attached to my body. I prepared most of my presentations as WWW pages, with links to my main Web pages, so that giving a presentation was simply a matter of showing the audience a series of Web pages. Since I wear (or, in the more existential sense, "am") an embodiment of my Wearable Wireless Webcam apparatus, one of the Web pages I show the audience is my "Put yourself in my shoes and see the world from my perspective" page. I found that what seemed quite typical to me was shocking, or at least surprising, to the audience, especially during the question and answer period, when I would walk around in the audience and talk to people one-on-one, transmitting our conversation to the projection television screen at the front. Perhaps the most useful outcome, however, was that I could hold a small notepad and look at it, and the whole audience could see the notepad as well. Often during presentations I would pace around the room, walk out into the audience, and even sit down in a chair as if I were a member of the audience, all while giving the Keynote Address at a major conference or symposium.

Being me instead of seeing me

In many ways, I began simply to give lectures to myself and transmit them to the big screen at the front of the room. Sometimes when giving a Keynote Address, I would even leave the room while giving it, delivering the address to myself, so that the audience could no longer see me.
Of course, the audience could still hear me speaking over the PA system, which was driven by an output of my wearable apparatus, and could still see out through my right eye (or sometimes my left eye), which was tapped and transmitted to the large-screen television. After a while, I began to wonder why I needed to be physically present at the conference site at all, particularly as the number of invitations to give talks kept growing. For a while I was giving approximately three Keynote Addresses a month, which meant far too much needless travel. So I came up with the idea of doing exactly what I had always done, with one minute difference: I wasn't there. Only my connection was there: not my face, my avatar, or any other aspect of me, except for the existential aspect of facilitation by which the audience could vicariously be me, being me rather than seeing me. I called this style of lecture a "Vicarious Soliloquy". It's not really a soliloquy in the usual sense of an audience watching, from an external viewpoint, as I give a talk to myself; rather, the audience vicariously experiences me giving the talk to myself.

Example of Vicarious Soliloquy: DEFCON 7

An example of the Vicarious Soliloquy genre was my Keynote Address at DEFCON 7. (What is DEFCON 7?) Perhaps it's incorrect to say "I was invited to give the Keynote Address at DEFCON 7". It would be more correct to say "The audience of DEFCON 7 was invited to my Keynote Address", or maybe "The audience of DEFCON 7 was invited to be my soliloquy" (as opposed to watching my soliloquy, as in traditional cinematography, where the audience experiences a second- or third-person viewpoint). This excerpt from my reality stream depicts how the audience was, in effect, able to be me rather than see me, in the sense that the apparatus of the invention, serving as the existential document camera, offered a first-person perspective:

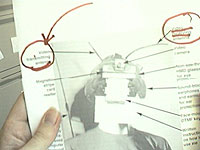

This capability adds a new dimension to videoconferencing. In addition to writing on the notepad, I also looked at, and annotated, copies of some of the historical pictures of the apparatus of my invention, thereby showing them to the audience.

Realitap: I am a luddite cyborg

During the Question and Answer period after my Keynote Address at DEFCON 7 (July 1999), someone asked why I use paper, pens, and pencils to give my lectures. I replied "I'm a luddite", to which the audience responded with understanding laughter. I went on to explain how modern technology has stolen away the humanistic aspects of our daily lives, such simple pleasures as the tactile feel of chalk, or of pencil on paper. I articulated my love of reality (I don't like to watch TV or see movies; I prefer reality instead), and explained that I was simply sharing my love of reality through EyeTap.

See me or be me?

In conjunction with my DEFCON 7 Keynote, an audience member asked whether I had a mirror I could look into (i.e. wondered what I looked like). I found a beamsplitter (a transparent material with a thin optical coating on it), which I held up in front of myself, showing one of my older units that I still often wear.

Not expecting to be seen by anyone, I wore one of my messy old experimental rigs, containing some parts more than 10 or 15 years old. After so many years of wear, it has become quite comfortable, like an old pair of Levi's. But then someone in the audience asked if I had a mirror, i.e. what did I look like. I was able to find a beamsplitter with an aluminum coating, which I held up in front of myself so that the audience could both "be me" and see me at the same time.

What's needed to receive one of my Vicarious Soliloquies

For someone to receive a lecture that I present in the Vicarious Soliloquy genre, the setup is simple. Put three computers in a lecture hall. Real computers! (No viruses, please.) By that, I mean something like GNUX (GNU + Linux), along with someone who's well versed in GNUX (i.e. so that I can talk remotely to that person using various UNIX tools, and that person will "speak my language").

One computer goes to the big-screen projector and runs a Web browser pointed at my right eye (i.e. visits my EyeTap site). A second computer runs sfspeaker (SpeakFreely), is connected to the PA system, and is responsive to the microphone array of my apparatus. A third computer runs sfmike and is set up so that I can hear the audience, their reactions to my lecture, and so on. The third system sends the sound back to me, so that I can get audience feedback without acoustic feedback (i.e. I can hear the audience without the screech or squeal of positive feedback run amok). For anyone with a reasonable degree of UNIX competence, it's actually quite simple to facilitate such a talk and connection to my apparatus.
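The three-computer routing described above can be summarized as a small directed graph of signal paths. The following Python sketch is illustrative only: the node names (`wearer_mic_array`, `computer3_sfmike`, and so on) are hypothetical labels, not SpeakFreely options, and the model assumes the return audio reaches only the wearer's earphones, with no acoustic path from the earphones back to the wearer's microphones. Since positive acoustic feedback needs a closed loop, the sketch checks the graph for a cycle, comparing the described setup with a hypothetical misrouting in which the audience microphone is also fed to the PA.

```python
from collections import defaultdict


def has_loop(edges):
    """Return True if the directed graph given by (src, dst) edges has a cycle.

    Depth-first search with three colors: WHITE (unvisited), GREY (on the
    current DFS path), BLACK (fully explored). Reaching a GREY node again
    means a closed signal loop exists.
    """
    graph = defaultdict(list)
    for src, dst in edges:
        graph[src].append(dst)

    WHITE, GREY, BLACK = 0, 1, 2
    color = defaultdict(int)  # every node starts WHITE

    def visit(node):
        color[node] = GREY
        for nxt in graph[node]:
            if color[nxt] == GREY:
                return True  # back edge: the signal returns to where it started
            if color[nxt] == WHITE and visit(nxt):
                return True
        color[node] = BLACK
        return False

    # Snapshot the keys: visit() may add sink nodes to the defaultdict.
    return any(color[n] == WHITE and visit(n) for n in list(graph))


# Hypothetical node names for the three-computer setup. Edges are signal
# paths: network links as well as sound travelling through the lecture hall.
DESCRIBED_SETUP = [
    # Computer 1: Web browser pointed at the EyeTap site, shown on the projector.
    ("eyetap_camera", "computer1_browser"),
    ("computer1_browser", "projector"),
    # Computer 2: sfspeaker receives the wearer's microphone array, drives the PA.
    ("wearer_mic_array", "computer2_sfspeaker"),
    ("computer2_sfspeaker", "pa_system"),
    ("pa_system", "audience_mic"),  # acoustic: the PA is heard in the hall
    # Computer 3: sfmike sends the audience sound back to the wearer only.
    ("audience_mic", "computer3_sfmike"),
    ("computer3_sfmike", "wearer_earphones"),
    # Assumption: no edge from wearer_earphones back to wearer_mic_array.
]

# Hypothetical misrouting: the audience microphone is also fed to the PA.
MISROUTED = DESCRIBED_SETUP + [("computer3_sfmike", "pa_system")]

if __name__ == "__main__":
    print("described setup has a loop:", has_loop(DESCRIBED_SETUP))
    print("misrouted setup has a loop:", has_loop(MISROUTED))
```

In the described routing the audience sound travels one way, back to the wearer, so no cycle exists. Adding a single path from the audience microphone to the PA closes the loop from the PA, through the hall, to the audience microphone and back to the PA, which is the kind of runaway feedback the separate third computer is meant to avoid.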